Researchers at Carnegie Mellon University’s Robotics Institute have proposed a new computational technique that could allow robots to better understand and handle fabrics.

The technique combines a tactile sensor with a simple machine-learning model known as a classifier.

“We are interested in fabric manipulation because fabrics and deformable objects in general are challenging for robots to manipulate, as their deformability means that they can be configured in so many different ways,” said Daniel Seita, one of the researchers who carried out the study.

“When we began this project, we knew that there had been a lot of recent work in robots manipulating fabric, but most of that work involves manipulating a single piece of fabric.

“Our paper addresses the relatively less-explored directions of learning to manipulate a pile of fabric using tactile sensing.”

Most existing approaches to robotic fabric manipulation reportedly rely on vision sensors, such as cameras or imagers that collect only visual data.

According to the researchers, while some of these methods have achieved good results, their reliance on visual sensors may limit them to simple tasks involving a single piece of cloth.

In contrast, the new method devised by Seita and his colleagues Sashank Tirumala and Thomas Weng uses data collected by ReSkin, a tactile sensor that can capture information about a material’s texture and its interaction with the environment.

Using this tactile data, the team trained a classifier to determine the number of layers of fabric grasped by a robot.
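To make the classification step concrete, here is a minimal sketch in Python. The article does not say which classifier the team used, so this swaps in a simple nearest-centroid classifier as an illustration. The class name, the assumption that each tactile reading has already been flattened into a fixed-length list of floats, and the toy numbers in the usage example are all hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence


class NearestCentroidLayerClassifier:
    """Hypothetical sketch: predict how many fabric layers are pinched
    from a tactile feature vector by picking the closest class mean."""

    def __init__(self) -> None:
        # Maps a layer count (class label) to the mean feature vector for that class.
        self.centroids: Dict[int, List[float]] = {}

    def fit(self, readings: Sequence[Sequence[float]], layers: Sequence[int]) -> None:
        # Group the training readings by their label (number of layers grasped).
        groups: Dict[int, List[Sequence[float]]] = defaultdict(list)
        for x, y in zip(readings, layers):
            groups[y].append(x)
        # Store the element-wise mean of each group as that class's centroid.
        for label, xs in groups.items():
            dim = len(xs[0])
            self.centroids[label] = [sum(x[i] for x in xs) / len(xs) for i in range(dim)]

    def predict(self, reading: Sequence[float]) -> int:
        if not self.centroids:
            raise ValueError("classifier has not been fitted")
        # Return the label whose centroid is nearest in Euclidean distance.
        return min(self.centroids, key=lambda label: math.dist(reading, self.centroids[label]))


if __name__ == "__main__":
    # Toy, made-up 3-value "readings" labelled with 0, 1 or 2 grasped layers.
    clf = NearestCentroidLayerClassifier()
    clf.fit(
        [[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [1.0, 1.0, 1.0],
         [1.1, 0.9, 1.0], [2.0, 2.0, 2.0], [2.1, 1.9, 2.0]],
        [0, 0, 1, 1, 2, 2],
    )
    print(clf.predict([1.05, 1.0, 0.95]))  # → 1
```

A nearest-centroid model is chosen here only because it is short and easy to follow. A real system would likely need more careful feature extraction and evaluation on recorded sensor data.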

“Our tactile data came from the ReSkin sensor, which was developed at CMU just last year,” said Weng.

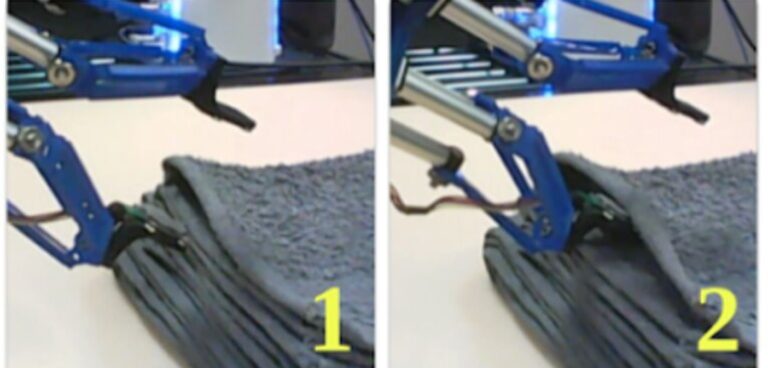

“We use this classifier to adjust the height of a gripper in order to grasp one or two top-most fabric layers from a pile of fabrics.”
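The height-adjustment idea Weng describes can be sketched as a simple feedback loop. This is an illustrative assumption, not the team's controller: `grasp_target_layers`, `sense_layers`, the millimetre heights and the fixed step size are hypothetical. Here `sense_layers` stands in for moving the gripper to a height, pinching, reading the tactile sensor and running a layer classifier.

```python
from typing import Callable


def grasp_target_layers(
    target_layers: int,
    start_height_mm: float,
    step_mm: float,
    sense_layers: Callable[[float], int],
    max_attempts: int = 10,
) -> float:
    """Hypothetical sketch: nudge the gripper height until the tactile
    classifier reports the desired number of grasped layers.

    Returns the height (above the table) at which the target was reached.
    """
    height = start_height_mm
    for _ in range(max_attempts):
        layers = sense_layers(height)
        if layers == target_layers:
            return height
        # Too few layers: move lower into the pile. Too many: back off upward.
        height += -step_mm if layers < target_layers else step_mm
    raise RuntimeError(f"could not grasp {target_layers} layer(s) in {max_attempts} attempts")


if __name__ == "__main__":
    # Toy stand-in for the real robot: a pile of five 2 mm layers whose top sits
    # 10 mm above the table; the gripper catches every layer above its height.
    def fake_sense(height_mm: float) -> int:
        return max(0, min(5, int((10.0 - height_mm) // 2)))

    print(grasp_target_layers(1, start_height_mm=10.0, step_mm=1.0, sense_layers=fake_sense))
```

Passing the sensing step in as a function keeps the loop independent of any particular robot or sensor API, so the same logic could be exercised against a simulated pile like the one above before running on hardware.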

To evaluate their technique, the team carried out 180 trials in a real-world setting. Their robotic system, consisting of a Franka robotic arm and a mini-Delta gripper with a ReSkin sensor integrated on the gripper’s ‘finger’, was tasked with grasping one or two pieces of cloth from a pile.

The approach reportedly outperformed baseline methods that do not consider tactile feedback.

“Compared to prior approaches that only use cameras, our tactile-sensing-based approach is not affected by patterns on the fabric, changes in lighting, and other visual discrepancies,” Tirumala said.

“We were excited to see that tactile sensing from electromagnetic devices like the ReSkin sensor can provide a sufficient signal for a fine-grained manipulation task, like grasping one or two fabric layers.

“We believe that this will motivate future research in tactile sensing for cloth manipulation by robots.”

The researchers hope the approach could eventually enhance the capabilities of robots deployed in fabric manufacturing facilities, laundry services, or homes.

Specifically, they believe it could improve the ability of these robots to handle complex textiles, multiple pieces of cloth, laundry, blankets, clothes, and other fabric-based objects.