An engineering team from Columbia University has built a robot that can reportedly create a model of its own body without human assistance.

The study shows how the robot created a kinematic model of itself and then used that self-model to plan movements, reach targets and avoid obstacles in a variety of situations. The robot also recognised damage to its own body.

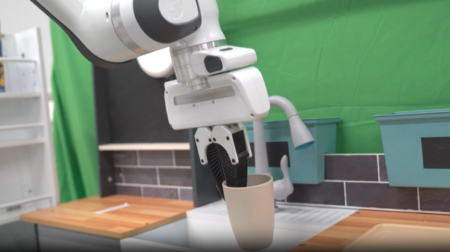

The researchers placed a robotic arm inside a circle of five cameras, which the machine used to watch itself as it moved, so that it could learn how its body responded to a range of motor commands.

After about three hours, the robot stopped. By then its internal neural network had finished learning the relationship between its motor actions and the volume it occupied in its environment.
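To make the idea of a self-model concrete, here is a minimal sketch of the kind of question one answers: given a pose, does the body occupy a particular point in space? This is an illustration only, not the researchers' method. Their model is a neural network learned from camera footage, whereas this stand-in uses hand-written geometry for a hypothetical two-link planar arm. The link lengths, the thickness and every function name are assumptions made for the example.

```python
import math

# Hypothetical two-link planar arm; all dimensions are made-up values.
LINK_LENGTHS = (0.3, 0.25)  # metres
LINK_RADIUS = 0.03          # metres, half the thickness of each link


def joint_positions(angles):
    """Forward kinematics: return the base, elbow and tip positions."""
    x, y, theta = 0.0, 0.0, 0.0
    points = [(x, y)]
    for length, angle in zip(LINK_LENGTHS, angles):
        theta += angle  # joint angles are relative to the previous link
        x += length * math.cos(theta)
        y += length * math.sin(theta)
        points.append((x, y))
    return points


def distance_to_segment(p, a, b):
    """Shortest distance from point p to the line segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))  # clamp to the ends of the segment
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def occupies(angles, point):
    """Self-model query: does the arm's body cover `point` in this pose?"""
    pts = joint_positions(angles)
    return any(distance_to_segment(point, a, b) <= LINK_RADIUS
               for a, b in zip(pts, pts[1:]))


def pose_is_safe(angles, obstacles):
    """A pose is safe if the body covers none of the obstacle points."""
    return not any(occupies(angles, o) for o in obstacles)
```

A planner with access to such a query can reject any candidate pose for which `pose_is_safe` returns `False`, which is, in spirit, how a self-model supports obstacle avoidance.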

“We were really curious to see how the robot imagined itself,” said Hod Lipson, professor of mechanical engineering and director of Columbia’s Creative Machines Lab, where the experiments were conducted.

“But you can’t just peek into a neural network; it’s a black box.”

After the researchers struggled to find a way to visualise what the network had learned, the self-image gradually emerged.

“It was a sort of gently flickering cloud that appeared to engulf the robot’s three-dimensional body,” said Lipson.

“As the robot moved, the flickering cloud gently followed it.”

The robot’s self-model was accurate to within about 1% of its workspace.

The ability of robots to model themselves without assistance could reportedly save labour and allow them to detect and compensate for their own damage.

The authors believe it is important to develop more self-reliant robotic solutions.

The work is part of Lipson’s decades-long research into robotic self-awareness.

The researchers are aware of the risks and controversies surrounding self-aware machines, but suggest that the level of awareness shown by their robot is trivial compared with that of a human.