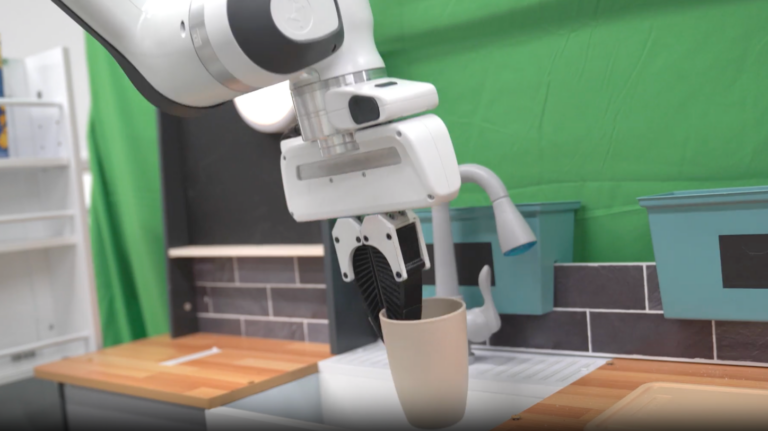

Researchers at Cornell University have developed a robot that uses artificial intelligence (AI) to complete tasks by observing how-to videos.

The system, known as RHyME (Retrieval for Hybrid Imitation under Mismatched Execution), enables robots to perform complex activities after watching a single instructional video.


Unlike traditional methods, which require extensive, precise and repetitive data collection, RHyME allows robots to draw on their ‘memory’ of previously viewed videos to complete tasks, even when their execution does not perfectly match the demonstration.

Robots traditionally struggle when faced with unexpected scenarios or slight deviations from their training data.

RHyME tackles this by enabling robots to retrieve relevant information from previously viewed videos to complete tasks, thereby increasing their flexibility and robustness.

In lab settings, robots trained with RHyME required only 30 minutes of task-specific data and achieved more than a 50% improvement in task success rates compared with earlier methods. The system is designed to reduce reliance on large amounts of robot-specific data, which has historically been a barrier to scaling robotic applications.

The project was developed by a team from Cornell’s Ann S. Bowers College of Computing and Information Science and was supported by funding from Google, OpenAI, the US Office of Naval Research, and the National Science Foundation.

Kushal Kedia, a doctoral student in the field of computer science and lead author of a corresponding paper on RHyME, said: “One of the annoying things about working with robots is collecting so much data on the robot doing different tasks.

“That’s not how humans do tasks. We look at other people as inspiration.”

Sanjiban Choudhury, assistant professor of computer science in the Cornell Ann S. Bowers College of Computing and Information Science, added: “This work is a departure from how robots are programmed today.

“The status quo of programming robots is thousands of hours of tele-operation to teach the robot how to do tasks. That’s just impossible. With RHyME, we’re moving away from that and learning to train robots in a more scalable way.”
