Google DeepMind has unveiled two AI models, Gemini Robotics and Gemini Robotics-ER, based on its Gemini 2.0 framework.

These models are designed to expand the capabilities of robots by enabling them to perform more complex actions, adapt to new environments, and enhance collaboration with humans.

Gemini Robotics is an advanced vision-language-action (VLA) model that adds physical actions as an output modality for controlling robots. Built on the Gemini 2.0 platform, the model is designed to perform a wide range of tasks, including ones it was not trained on. DeepMind highlights three qualities: generality, interactivity, and dexterity.

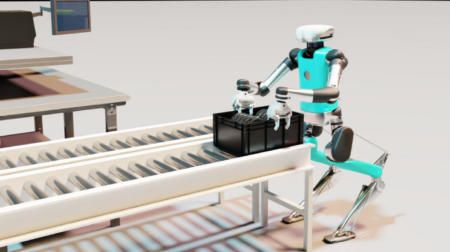

These qualities allow robots powered by Gemini Robotics to understand and react to various situations, interact with their environment seamlessly, and manipulate objects with high precision. This progress is seen as a major leap towards building general-purpose robots that can operate effectively in diverse settings.

In addition to its improved performance, Gemini Robotics can be adapted to different robot types, from simple bi-arm platforms to more complex humanoid robots like Apptronik’s Apollo.

Gemini Robotics-ER, the second model introduced, focuses on enhancing spatial reasoning capabilities for robots. By building on the foundation of Gemini 2.0, this model improves object detection, 3D vision, and spatial understanding.

It allows robots to perform tasks such as grasping objects with appropriate precision and determining safe actions based on their environment. Gemini Robotics-ER is designed so that roboticists can connect it to their existing low-level controllers, offering enhanced embodied reasoning to address real-world challenges.

Both models are part of Google DeepMind’s ongoing collaboration with robotics companies, including Apptronik, to build next-generation humanoid robots. The introduction of Gemini Robotics and Gemini Robotics-ER opens up new possibilities for the robotics industry, with the potential to enhance robot capabilities in various sectors, from healthcare to logistics.

In line with its commitment to responsible AI development, DeepMind is also prioritising safety in robotics. The company has implemented frameworks to ensure that robots act safely and responsibly, including a newly released dataset focused on improving semantic safety in embodied AI.

Additionally, Google DeepMind is consulting with external experts and adhering to rigorous safety standards to address potential risks associated with advanced robotic capabilities.

As part of its efforts to advance the field, DeepMind is making Gemini Robotics-ER available for testing by trusted partners, including Agile Robots, Boston Dynamics, and Agility Robotics, as they explore the model’s potential for real-world applications. DeepMind looks forward to continuing its research and refining its models for future deployment in diverse robotic systems.
