Artificial intelligence is on track to transform the future of healthcare. As the technology becomes increasingly integrated into the work of medical professionals, society can soon expect dramatic changes in patient health outcomes, writes Katie Searles…

According to the latest statistics from Cancer Research UK, there are more than 166,000 cancer deaths in the UK every year, or more than 450 every day. Meanwhile, heart and circulatory diseases account for around 25% of all deaths in the UK, more than 168,000 a year or an average of one every three minutes, while strokes cause 34,000 deaths in the UK each year, according to the British Heart Foundation (BHF). What's more, the Office for National Statistics reports that in 2019 some 66,424 of the 530,841 deaths registered in England and Wales (roughly one in eight) were due to dementia and Alzheimer's disease.

It makes for grim reading, especially given that the NHS says one in two people in the UK will develop some form of cancer, Alzheimer's Research UK says one in three people born in Britain this year will develop dementia, and the BHF says more than half of the country's population will get a heart or circulatory condition, in each case during their lifetime.

But what if these life-threatening conditions, and others, could be detected earlier and more accurately? Fortunately, artificial intelligence (AI) is becoming increasingly adept at doing what humans do, but more efficiently, more quickly and at lower cost. Mammography is a case in point. According to the American Cancer Society, false-positive mammogram results are common, leading to one in two healthy women being wrongly told they may have cancer. However, AI now enables mammograms to be reviewed and interpreted 30 times faster with 99% accuracy, reducing the need for unnecessary biopsies.

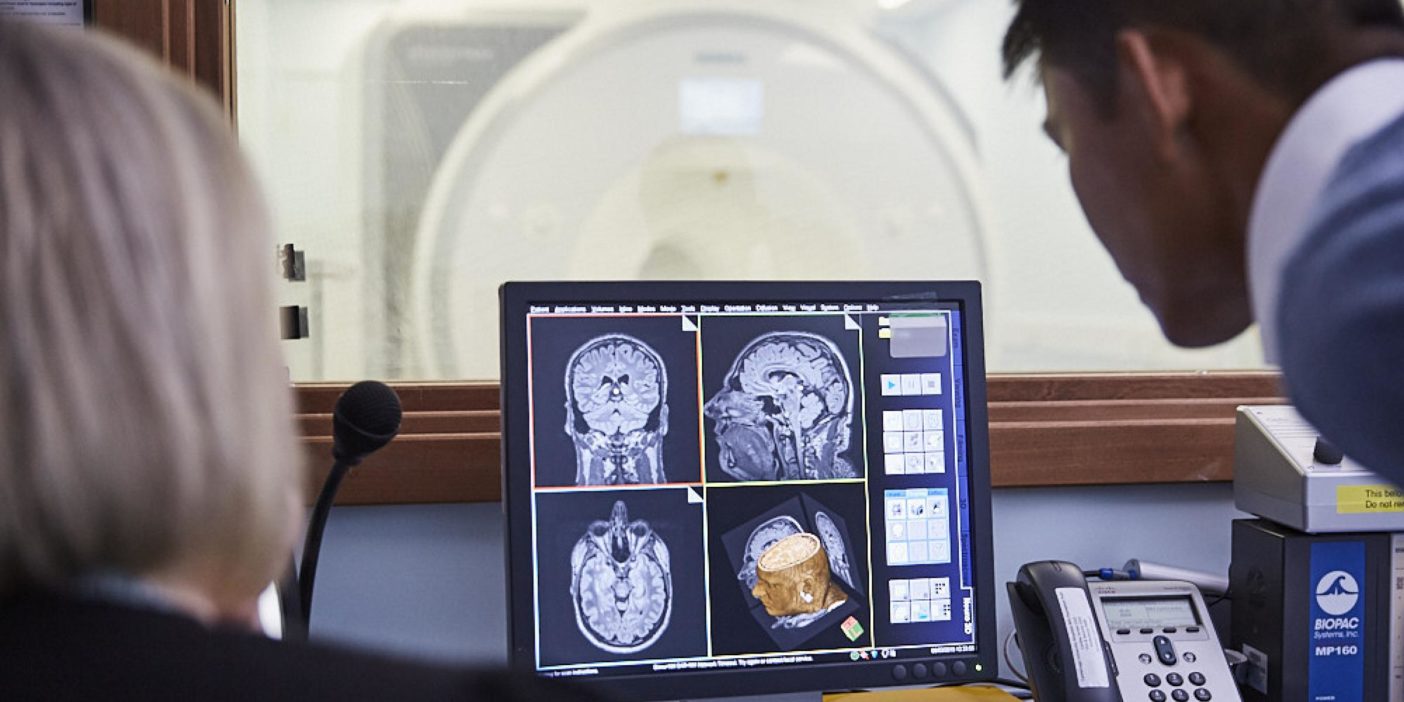

What's more, machine-learning tools can help analyse diagnostic data from X-rays, ECGs and MRIs, so that the diseases above, which bring so much sadness and heartache to so many lives, can be detected at a very early stage from extremely subtle and previously unnoticed changes. In the UK, a joint study by Imperial College London and University College London is evaluating an AI-powered system that identifies areas of the eye showing signs of geographic atrophy (GA), a common condition that can cause reduced vision or blindness. In Cambridge, researchers have developed an AI approach to diagnosing dementia from a single brain scan, and are also developing wearable devices that could identify the early warning signs of Alzheimer's some 20 years sooner than traditional methods.

According to Francesca Cordeiro, professor of ophthalmology at Imperial College London, AI can now be harnessed to identify both life-threatening and life-changing diseases because the technology can look beyond structural changes and provide a much deeper, cellular-level view.

Likewise, Dr Laura Phipps of Alzheimer’s Research UK describes AI algorithms as having the ability to behave “like a super hardworking, super-experienced clinician. These AI algorithms would have seen thousands and thousands of cases – more than a clinician probably could see in their working life”.

Vital signs

It is this ability to process the huge quantities of patient files and scans routinely collated at healthcare facilities that is enabling research projects to develop AI-powered models that can identify diseases quickly. Because they can study a vast array of clinical data over and over again, these AI projects are rapidly speeding up the diagnostic process. For example, Cordeiro's work has enabled glaucoma to be identified 18 months earlier than normal, and wet macular degeneration (a chronic eye disorder that causes blurred vision or a blind spot in a person's visual field) 36 months sooner. “AI is key to this because it gives you a robust and scalable way that is reproducible,” she says.

Data is used not only to train algorithms to spot early warning signs of disease but also to help validate those methods and optimise their parameters across different scenarios, which makes the tests highly repeatable. Zoe Kourtzi, a researcher at the University of Cambridge and a fellow at The Alan Turing Institute, the UK's national institute for data science and AI, stresses that, while “digital twins and modelling are helpful, the real world will always throw out problems that cannot be predicted”. What's more, she notes that patient interactions and scenarios often evolve over time, which is why Kourtzi and other researchers are developing systems designed for real-world conditions.

For example, Alzheimer's Research UK hopes to be years ahead of the game with a project that collects masses of data to train machine-learning models capable of detecting early signs of dementia. “We know from research that some human behaviours and the way our bodies work subtly change in the very early stages of the disease, and that these changes might be unnoticeable to the human eye,” says Phipps.

“But they can be picked up by modern technologies such as smartphone apps and wearable devices,” she adds. Because not everyone has regular MRI scans, the EDoN (Early Detection of Neurodegenerative diseases) project is working to create Fitbit-style devices that collect data from a patient's daily activities. As Kourtzi explains, “The algorithms that have learned a lot of patterns can actually turn these warning signs into something more predictive. And that's where they can make a difference before any symptom arises.

“We’ll see in years to come more and more people who are ready to use this kind of passive, constant, non-invasive tracking technology, like smartwatches, to understand their health,” she continues. “[These devices] normalise it and that makes people much more open to the diagnostic process as well.”

AI on the high street

Encouraging patients to get checked is a constant hurdle for healthcare professionals, but what if AI-powered diagnostic tools could move from the hospital into routine check-ups? “The goal over the next few years is to roll out a diagnostic tool enabling high street opticians and clinicians to test if there's an abnormal dark spot on the eye,” says Cordeiro, who adds that such a system would ensure that only those who need to be referred to a specialist actually are.

What's more, she believes it would also reduce the number of false positives produced by current pressure tests, which in turn would free up experts for advanced cases. Kourtzi echoes this, explaining that she uses a traffic light system. A green prognosis indicates that the patient is stable and does not require more invasive tests or hospitalisation. An amber prognosis signifies that the patient may simply need a lifestyle change or additional support from their own community, with close follow-up appointments to monitor progress. According to Kourtzi, this would enable healthcare professionals to focus on red prognoses, ensuring NHS resources are not misdirected to the wrong patients.
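To make the triage idea concrete, here is a minimal Python sketch. It assumes each patient has already been given a risk score between 0 and 1 by some predictive model, and the names and thresholds (`AMBER_THRESHOLD`, `RED_THRESHOLD`, `triage`, `red_queue`) are purely hypothetical: the article does not describe how Kourtzi's system scores patients or where its cut-offs lie.

```python
from dataclasses import dataclass

# Hypothetical cut-offs for illustration only; the real system's
# scoring method and thresholds are not described in the article.
AMBER_THRESHOLD = 0.3
RED_THRESHOLD = 0.7


@dataclass
class Patient:
    patient_id: str
    risk_score: float  # assumed model output in the range [0, 1]


def triage(patient: Patient) -> str:
    """Map an assumed risk score to a traffic-light prognosis."""
    if not 0.0 <= patient.risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if patient.risk_score >= RED_THRESHOLD:
        return "red"    # prioritise for specialist attention
    if patient.risk_score >= AMBER_THRESHOLD:
        return "amber"  # lifestyle change or community support, close follow-up
    return "green"      # stable: no invasive tests or hospitalisation


def red_queue(patients: list[Patient]) -> list[str]:
    """Return the IDs of red-flagged patients, highest risk first."""
    reds = [p for p in patients if triage(p) == "red"]
    reds.sort(key=lambda p: p.risk_score, reverse=True)
    return [p.patient_id for p in reds]


if __name__ == "__main__":
    cohort = [
        Patient("A", 0.12),
        Patient("B", 0.45),
        Patient("C", 0.91),
        Patient("D", 0.74),
    ]
    for p in cohort:
        print(p.patient_id, triage(p))
    print(red_queue(cohort))
```

Running it labels A green, B amber and C and D red, and puts C ahead of D in the red queue. The point of the design is the one Kourtzi makes: only the red group is routed towards scarce specialist time.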

The EDoN team has completed a preliminary health economics assessment, in which it evaluated utilisation rates and the length of patients' hospital stays. Encouragingly, the assessment predicts a £5m reduction in costs over the next five years. However, researchers believe AI can do much more than cut costs and save time. Many see the ability to alter a patient's prognosis as the real benefit, which is why teams across the UK hope the technology becomes commonplace within the national healthcare system.

As Phipps concludes, “There are lots of areas of potential. People don’t realise that AI is being used in much the same way as so many other aspects of their lives that they take for granted.”

Patients are a virtue

As well as being put to good use in diagnostics, a separate branch of AI is being used by healthcare professionals to train the doctors of the future. Natural language processing (NLP) is concerned with the interactions between computers and human language, and in particular with how to program computers to process and analyse large amounts of natural language data. At this year's virtual Robotics & Innovation Conference, Dr Tony Young, national clinical lead for innovation at NHS England, showcased the potential of NLP via a simulated encounter with an AI-powered patient. “Many people have come up with the AI doctor, but actually, if you learn the questions that doctors are asking patients, perhaps you can generate a huge data set that will allow you to actually be a much better medical professional,” says Young.

During the live demo, Young asked the AI-powered patient, ‘Sabine’, a series of questions about her symptoms and concerns. Her responses enabled him to ask further probing questions to get to the root of the problem. According to Young, this solution could enable medical students to practise patient interactions without leaving the lecture hall or classroom.

“Imagine being able to train the next generation of doctors with patients who are loaded with all the right diagnostic criteria,” he says. “That right there unlocks artificial intelligence assistance, not just in the surgical procedure itself, but in the wider experience.” This is just one piece of AI kit that the NHS has invested in following funding from the UK Department of Health and Social Care (DHSC), which Young describes as “putting its money where its mouth is”.

The DHSC has pledged £140m over the next four years for the NHS AI Lab, which, since September 2020, has awarded 80 grants to innovative healthcare AI start-ups. A third round was launched this June, with Simon Stevens, chief executive of NHS England, stating: “Our message to developers worldwide is clear – the NHS is ready to help you test your innovations and ensure our patients are among the first in the world to benefit from new AI technologies.”

It’s good to talk

AI and NLP are also being deployed by Woebot Health, an Irish start-up developing a digital coach that helps people identify and cope with mental health challenges including stress, anxiety and depression. Joe Gallagher, vice president of product at Woebot Health, explains that the platform uses these techniques “so it can safely deliver the right intervention to the right person at the right time”. Its tailoring algorithm personalises the experience according to the user's mood, the problem they describe and their progress through the programme so far.

In the “shifting and episodic” world of mental health, Woebot believes digital therapeutics can integrate into the healthcare ecosystem and plug the gaps along the healthcare journey. Described as a “relational agent”, the platform works to build a trusted relationship with people, which makes it more than simply a chatbot.

“Woebot builds trust by building a bond with users,” says Gallagher. “Bond – or therapeutic alliance in clinical terms – reflects the rapport that develops between client and therapist. Bond gives rise to authentic disclosure and really meaningful engagement. The failure of technology to be able to develop a bond with people has been used as the reason why technology is never going to be as efficacious as humans.”

The company's latest study, published in JMIR Formative Research and involving 36,070 users, set out to challenge this view. It found that not only can Woebot quickly form a human-level bond with people, but that the bond does not appear to erode over time. “That was something that was not considered possible before,” says Gallagher. “The study marks a future in which digital mental health solutions can have a crucial place in a comprehensive ecosystem of care.”