Marketing for robotic ‘dogs’ plays up their potential for good, but the debate about lethal autonomous weapons suggests public anxiety is warranted, argue the University of Canterbury’s Jeremy Moses and Geoffrey Ford.

When it comes to dancing, pulling a sled, climbing stairs or doing tricks, “Spot” is definitely a good dog. It can navigate the built environment and perform a range of tasks, clearly demonstrating its flexibility as a software and hardware platform for commercial use.

Viral videos of Boston Dynamics’ robotic quadruped showcasing those abilities have been a key pillar of its marketing strategy. But earlier this year, when a New York art collective harnessed Spot to make a different point, the company was quick to deny its potential for harm.

The project, “Spot’s Rampage”, involved fitting one of the robotic dogs with a paintball gun and letting internet users take remote control of it to destroy various artworks in a gallery. It ended with Spot failing to function correctly, but Boston Dynamics used Twitter to strongly criticise the stunt:

“We condemn the portrayal of our technology in any way that promotes violence, harm, or intimidation. Our mission is to create and deliver surprisingly capable robots that inspire, delight and positively impact society.”

“Spot’s Rampage” was not the first work to imagine robot quadrupeds being used for violent ends. Spot also inspired the “Metalhead” episode of the dystopian TV series Black Mirror, in which robot quadrupeds relentlessly pursue and kill human prey.

This is more than science fiction, however. A serious debate over the regulation or banning of lethal autonomous weapons systems is happening under the auspices of the United Nations, including how such systems should comply with existing humanitarian laws.

Robot anxiety

The contrast between the potentially violent robot of “Spot’s Rampage” and “Metalhead”, on the one hand, and Boston Dynamics’ insistence that Spot be viewed as a force for good, on the other, illustrates the tensions we have observed in our research.

As part of a larger project looking at debates on lethal autonomous weapons, we made a detailed study of 88,970 tweets about Spot from 2007 to 2020. The results indicate public responses have been significantly less positive than Boston Dynamics would like.

Despite the generally playful and peaceful presentation of Spot in Boston Dynamics’ videos, and obvious public interest in and fascination with the technology, Twitter users also express recurring scepticism and concern.

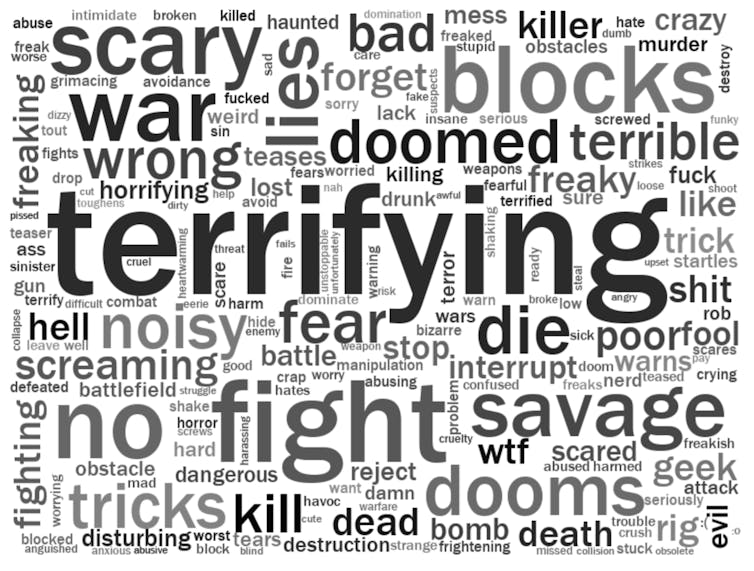

A word cloud of the most commonly used negative language in those tweets shows words such as “terrifying”, “war” and “doomed” to be noticeably prominent.

Analysis of emotive words reveals recurring features of conversations about Spot: dark humour, sarcasm and suspicion about the intended uses of the technology. Associations between Boston Dynamics, its previous owner Google, the military and the killer robots of popular culture (such as the Terminator films) also recur.

Boston Dynamics CEO Robert Playter has dismissed such negative public reactions as “fiction” grounded in the “rogue robot story” and misunderstandings of the technology.

Depictions of robots that will not harm humans, and may even save lives, have been a mainstay of public messaging by both Boston Dynamics and the US military’s Defense Advanced Research Projects Agency (DARPA). Both emphasise the potential humanitarian applications of robotic quadrupeds in civilian and military service, from fighting Covid-19 to search and rescue to taking soldiers out of harm’s way.

Spot is going to work in the Construction and Real Estate industries to improve job site visibility and planning. See how Spot makes a difference on site. https://t.co/zU2SzXmLbP pic.twitter.com/96DYG1U8S2

— Boston Dynamics (@BostonDynamics) April 28, 2021

Military connections

But negative reactions should not be too easily discounted. Boston Dynamics’ technology was advanced through military funding, and military applications have been seen as a key market. In 2019, company founder and then-CEO Marc Raibert signalled that Boston Dynamics “will probably have military customers”.

And in the month following the “Spot’s Rampage” prank, the robot was tested by the NYPD and by French armed forces in combat exercises, although public backlash against the NYPD trials has since brought an early end to the department’s contract with Boston Dynamics.

Meanwhile, other robotics companies, including Boston Dynamics’ competitor Ghost Robotics, have actively and successfully sought contracts with the US military.

Robots used in US policing are not weaponized. Yet. Let’s keep it that way: Legislate to prohibit them from being armed – @hrw https://t.co/hmoC5F8EmR @BanKillerRobots @AOC pic.twitter.com/b0ljivr4qh

— Mary Wareham (@marywareham) March 24, 2021

Ghost Robotics CEO Jiren Parikh has said its Vision 60 quadruped “can be used for anything from perimeter security to detection of chemical and biological weapons to actually destroying a target”.

More recently, Ghost Robotics released footage of its robot quadruped firing a projectile into a target to demonstrate its potential use for bomb disposal.

The apparent flexibility of these machines, which can carry different payloads and be fitted and programmed for different missions, suggests a range of potential applications, including as lethal autonomous weapons.

As long as the military end-use remains uncertain and the technology itself is still developing, we should remain wary of attempts by developers, marketers and military advocates to shape and manage public sentiment with the promise of “saving lives”.

This article was written by Jeremy Moses, associate professor in International Relations, University of Canterbury, and Geoffrey Ford, lecturer in Digital Humanities / Postdoctoral Fellow in Political Science and International Relations, University of Canterbury. It is republished from The Conversation under a Creative Commons license.